What is A/B Testing and How Companies Use It

Insights on what works and what doesn’t

Companies building products want feedback from their customers. Customer feedback is one of the most important inputs for building a useful and successful product. If customers don’t like a feature or a design, it is in your favour to fix the problem. This is true not just for physical products, but also for digital products like e-commerce websites, social networks and software products.

In the digital landscape, one of the most effective strategies to gain insights into what customers want is A/B testing.

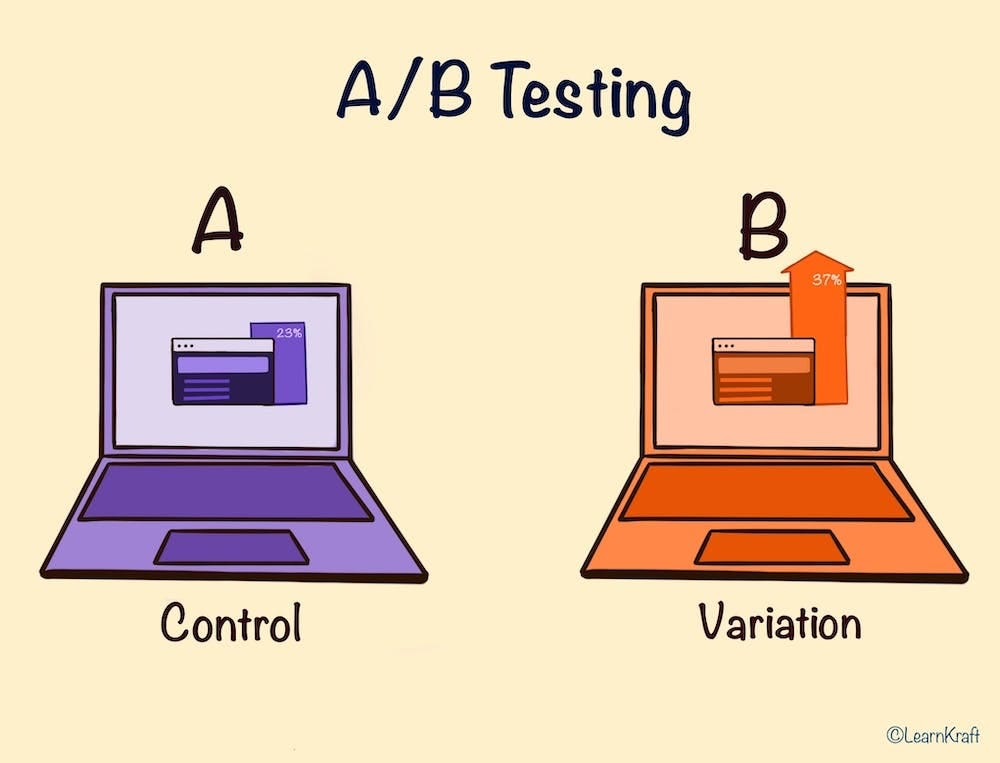

What is A/B Testing?

A/B testing is a technique where a company shows two slightly different versions of a part of the product to users and then measures which one performs better. Basically, it is a race between two versions, A and B, where the winner is decided based on the relevant metrics such as improved conversion, more time spent on the product, etc.

An A/B test is a type of controlled experiment. A is the existing version and B is the version that contains the changes. The users are split into two groups: one group is shown version A and the other group is shown version B. The performance of each group is then measured to see which one did better. If Group B performs significantly better than Group A, version B is rolled out to all users and becomes the new standard. Essentially, version B becomes the new version A.

Where is A/B Testing Applied?

Nowadays A/B testing is applied to almost all areas in digital business. People create A/B tests for a variety of things such as the color of the buy button, email subject lines, ads, and testing new software features. Sometimes the color of the buy button can have an impact on the actual conversions. An A/B test can reveal this. Similarly, one email subject line can have a better open rate than the other.

Typically, when people talk about A/B testing, they are referring to digital marketing. But it applies equally to software. In the software world, it is more commonly referred to as feature flagging and experimentation.

A/B Testing in Software

In a software company, the engineering and product teams are primarily responsible for developing new features. But how do they determine which ones to build and where to focus their resources? One effective strategy is experimentation. In this approach, the team first designs a scaled-down version of the new feature, which is then rolled out to a small segment of their user base. The impact of this feature on the chosen group is carefully measured. If the response is positive, the team proceeds to develop the feature fully and release it to all users. However, if the impact is negative or insignificant, they take the experiment as a learning opportunity. Depending on the insights gained, they may revise and retry the feature, or they could decide to abandon it and work on a different experiment.

Experimentation Example

Imagine you’re a product manager at a SaaS company providing CRM software. You’ve received feedback that customers are not very satisfied with the reporting features. You decide to improve the reporting features by adding new reports and more granularity. Instead of creating a big plan, you start by building a small thing, like a new daily sales report. You select a small user base and launch this new report to this selected user group. The rest of the users will not see this new report.

After running the experiment for some time, you compare the engagement levels and feedback from these two user groups. This could include things like time spent checking reports, frequency of use, and feedback received. Based on the result of this experiment, you can decide whether to roll out the new report to all users and how to plan further work on the overall reporting feature.

Important Considerations

To run a successful A/B test, there are a few important considerations.

User Selection: The way you select the small user group for testing your new feature is very important. You may select these users randomly, or you might have a selection criterion that's relevant to the feature. Once you've established a selection criterion, you'll need to implement it in your code to ensure that only the selected users have access to this new feature. This implementation is done through a technique called feature flagging. Some companies build this directly into their product, while others use third-party software like LaunchDarkly for this purpose.
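To make the idea of a feature flag concrete, here is a minimal sketch of a home-grown flag check in Python. It assumes a random split based on hashing the user ID; the function names, the experiment name `daily_sales_report`, and the 10% rollout are all made up for illustration, and this is not how LaunchDarkly or any other specific tool works internally.

```python
import hashlib


def in_experiment(user_id: str, experiment: str, rollout_percent: float) -> bool:
    """Return True if this user falls inside the rollout percentage.

    Hypothetical helper: hashing makes the split stable, so the same user
    always gets the same answer without storing any assignment.
    """
    # Include the experiment name so each experiment gets its own split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits to a bucket between 0 and 9999.
    bucket = int(digest[:8], 16) % 10_000
    return bucket < rollout_percent * 100


def reports_for(user_id: str) -> list[str]:
    """Hypothetical CRM code path guarded by the flag."""
    reports = ["monthly_sales"]
    if in_experiment(user_id, "daily_sales_report", rollout_percent=10):
        reports.append("daily_sales")  # only the test group sees this
    return reports
```

Hashing is just one possible design. Its main appeal is that a user keeps seeing the same version on every visit, which matters if you want the two groups to stay cleanly separated for the whole test.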

Measurement: Just showing the new feature to the selected group is not enough. You also need the capability to measure the impact of this experiment through the data you gather. For instance, for an e-commerce company, the metric could be increased conversion rates. For a social network, it could be increased user engagement. For a SaaS company, it might be higher product usage. Measuring and reporting such impact is a lot of engineering work.
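As a rough illustration of the measurement side, the sketch below turns a log of events into a conversion rate per group. The event shape, a `(user_id, variant, converted)` tuple, is an assumption made for this example; a real product would usually read this from an analytics pipeline or a data warehouse.

```python
from collections import defaultdict


def conversion_rates(events):
    """Compute the share of users who converted in each variant.

    `events` is an iterable of (user_id, variant, converted) tuples.
    A user counts once, even if they generate many events.
    """
    users = defaultdict(set)       # variant -> all users seen
    converters = defaultdict(set)  # variant -> users who converted
    for user_id, variant, converted in events:
        users[variant].add(user_id)
        if converted:
            converters[variant].add(user_id)
    return {v: len(converters[v]) / len(users[v]) for v in users}


# Made-up sample data, for illustration only.
sample_events = [
    ("u1", "A", False),
    ("u1", "A", True),
    ("u2", "A", False),
    ("u3", "B", True),
    ("u4", "B", True),
]
rates = conversion_rates(sample_events)  # {"A": 0.5, "B": 1.0}
```

Counting users rather than raw events is a deliberate choice here: one very active user should not be able to swing the result for a whole group.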

Test Duration and Sample Size: It's important to run the A/B test for an adequate duration and on a substantial enough sample size. This helps minimize the impact of anomalies and ensures the credibility of the results obtained from the test.
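How large is "substantial enough"? One common way to estimate it for a conversion-rate metric is the standard sample size formula for comparing two proportions. The sketch below assumes a two-sided test with a 5% significance level and 80% power, which are conventional defaults rather than anything specific to this article, and the baseline and expected rates are made-up numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, expected: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH group to detect the given lift.

    baseline: current conversion rate (version A), e.g. 0.10
    expected: conversion rate you hope version B reaches, e.g. 0.12
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    effect = expected - baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Detecting a lift from 10% to 12% needs a few thousand users per group.
n = sample_size_per_group(0.10, 0.12)
```

The smaller the improvement you want to detect, the more users you need, so tiny changes on low-traffic pages can take a long time to test properly.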

Evaluate Performance (Statistical Significance): Once you've started showcasing the new feature to a select user base and you're tracking the appropriate metrics, the next step is to evaluate the performance of your experiment. This evaluation requires some statistical analysis to determine whether the differences observed are statistically significant or merely due to chance. This step ensures the validity of your results and helps avoid false positives.
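For a conversion-style metric, one widely used check is the two-proportion z-test. The sketch below is a minimal version that uses only Python's standard library; the numbers in the example call are invented, and other metrics (such as time spent) would need a different test.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for the difference in conversion rates.

    conv_a / n_a: conversions and users in group A (control)
    conv_b / n_b: conversions and users in group B (variation)
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value: chance of a gap at least this large if A == B.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Invented example: 400 of 4,000 users convert on A, 480 of 4,000 on B.
z, p_value = two_proportion_z_test(400, 4000, 480, 4000)
significant = p_value < 0.05
```

A p-value below the threshold you picked in advance (0.05 is a common choice) suggests the difference is unlikely to be due to chance alone. It is best to decide on the threshold and the sample size before the test starts, not after looking at the results.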

A/B Testing Examples from Leading Companies

Several big tech companies are known for running A/B tests extensively. Here are a few examples.

Google: This is one of the most cited examples of A/B testing. Google wasn’t able to decide which shade of blue to use in their ad links, so they A/B tested 41 different shades. The test was a success: the winning shade reportedly increased their yearly revenue by an estimated $200 million!

Airbnb: Airbnb ran A/B tests on their search result layouts. They tested a more prominent design of the price-per-night feature by moving it from the bottom of the results to the top. This resulted in higher user engagement.

Uber: Uber performed an A/B test on their surge pricing feature. They wanted to test whether they should display the surge price directly or show it as a multiplier of the normal price (like 1.2x). The test results showed that people were more likely to accept surge pricing when the prices were shown directly rather than as a multiplier.

Booking.com: Booking.com used A/B testing to decide whether showing hotel prices with or without tax led to higher conversion rates. They found out that showing the final price (including tax) from the start resulted in a more positive user experience and increased bookings.

Note that all these companies run A/B tests regularly on a variety of features. These are just a few select examples.

I hope this article has offered you a clearer understanding of A/B testing. Until next time!